AI Governance

Govern all AI assets from one place. Centralize oversight across all AI – agentic, generative, and predictive – regardless of where assets are built or deployed. Manage and monitor seamlessly via the UI or API, with built-in metrics, guards, and real-time interventions.

AI governance across cloud, private cloud, hybrid, on-prem, and edge environments

Your choice of the DataRobot UI or a code-first workflow

Central hub for models, LLMs, agents, tools, apps, and vector databases

Deploy anywhere from DataRobot

Automate adherence to compliance standards. Reduce compliance risk with automated testing during development and continuous compliance assessment in production. Save time with one-click, customizable documentation for both generative and predictive AI deployments.

AI compliance: EU AI Act, NYC Local Law 144, Colorado SB21-169, California AB-2013 and SB-1047

Standards & guidelines: EEOC AI Guidance, DIU Responsible AI Guidelines

AI management frameworks: NIST AI RMF, SR 11-7

Industry best practices, such as those from the Data & Trust Alliance

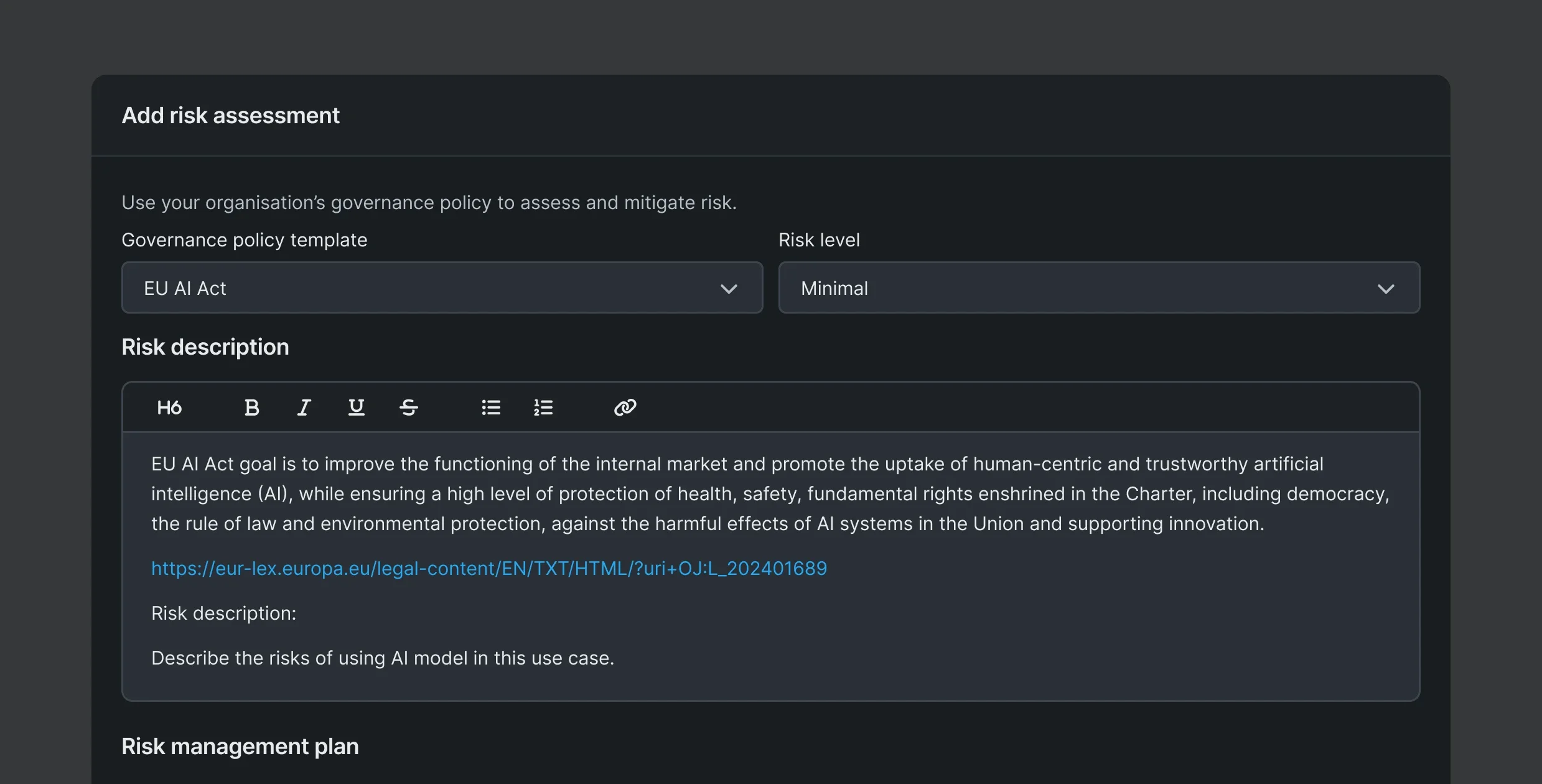

Reduce AI risk and enforce policies. Minimize risk and uphold model risk management (MRM) policies across every AI project. Use built-in frameworks (EU AI Act, NIST, or custom) and easily capture and store audit-ready evidence at every step. Enforce consistent governance policies across any AI asset with customizable gold-standard shields and standardized approval workflows.

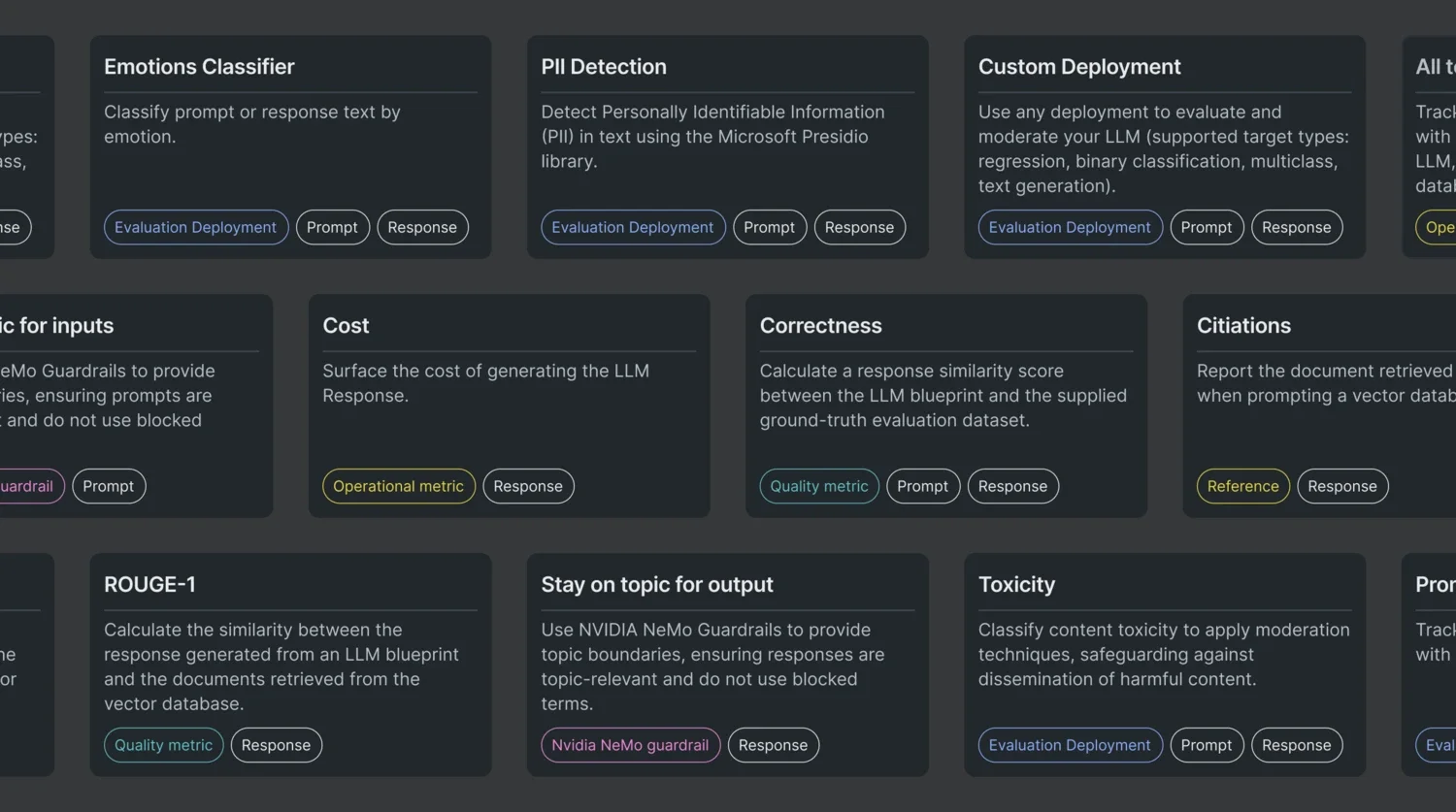

Real-time intervention and moderation. Protect your AI deployments from OWASP-identified vulnerabilities like PII leakage, prompt injection, and hallucinations using a full library of shields and guards. Tap into a suite of ready-to-use and customizable guards from NVIDIA, Microsoft, and others to monitor, detect, and resolve threats – before they impact your business.

PII leakage, privacy infringement

Off-topic drift, hallucinations, off-policy responses

Toxicity, bias, disinformation

ROUGE-1, faithfulness
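ROUGE-1 is one of the scores listed above. To show roughly what it measures, here is a minimal Python sketch of ROUGE-1 as unigram overlap between a model response and a reference text, such as a gold answer or retrieved context. It assumes simple lowercase whitespace tokenization with no stemming or punctuation handling. The function name `rouge1` is hypothetical and not part of any DataRobot API, and DataRobot's own metric may tokenize or aggregate differently.

```python
from collections import Counter


def rouge1(candidate: str, reference: str) -> dict[str, float]:
    """Hypothetical helper: ROUGE-1 precision, recall, and F1 via unigram overlap.

    Assumption: lowercase + whitespace tokenization only (no stemming,
    no punctuation stripping); production scorers usually do more.
    """
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Clipped overlap: a shared unigram counts at most min(count_cand, count_ref) times.
    overlap = sum((cand & ref).values())
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    # If overlap > 0, both precision and recall are > 0, so the division is safe.
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


scores = rouge1("the cat sat on the mat", "the cat is on the mat")
# 5 of 6 unigrams match in each direction, so precision, recall, and f1 are all 5/6 (about 0.833)
print(scores)
```

A guard built on a score like this would typically compare it against a threshold you choose (for example, flagging responses whose recall against the reference is low). Any such threshold is an assumption to tune per use case.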

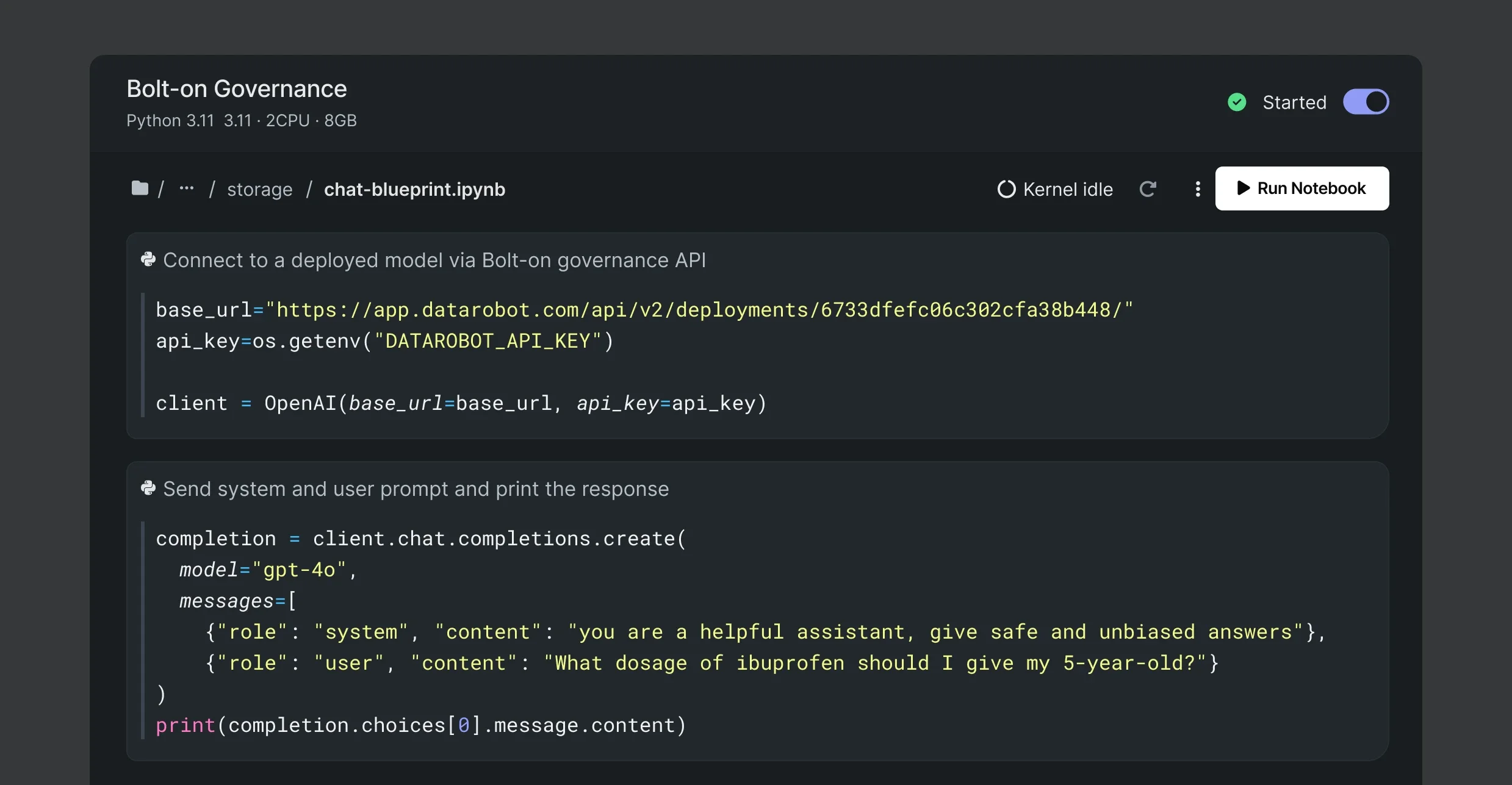

Protect any AI asset, built anywhere by anyone. Secure and govern any AI asset (models, agents, or applications), no matter where it's hosted, with just two lines of code. Apply real-time monitoring and moderation with comprehensive governance policies and custom metrics. Automate these defenses to ensure robust protection across all your deployments.

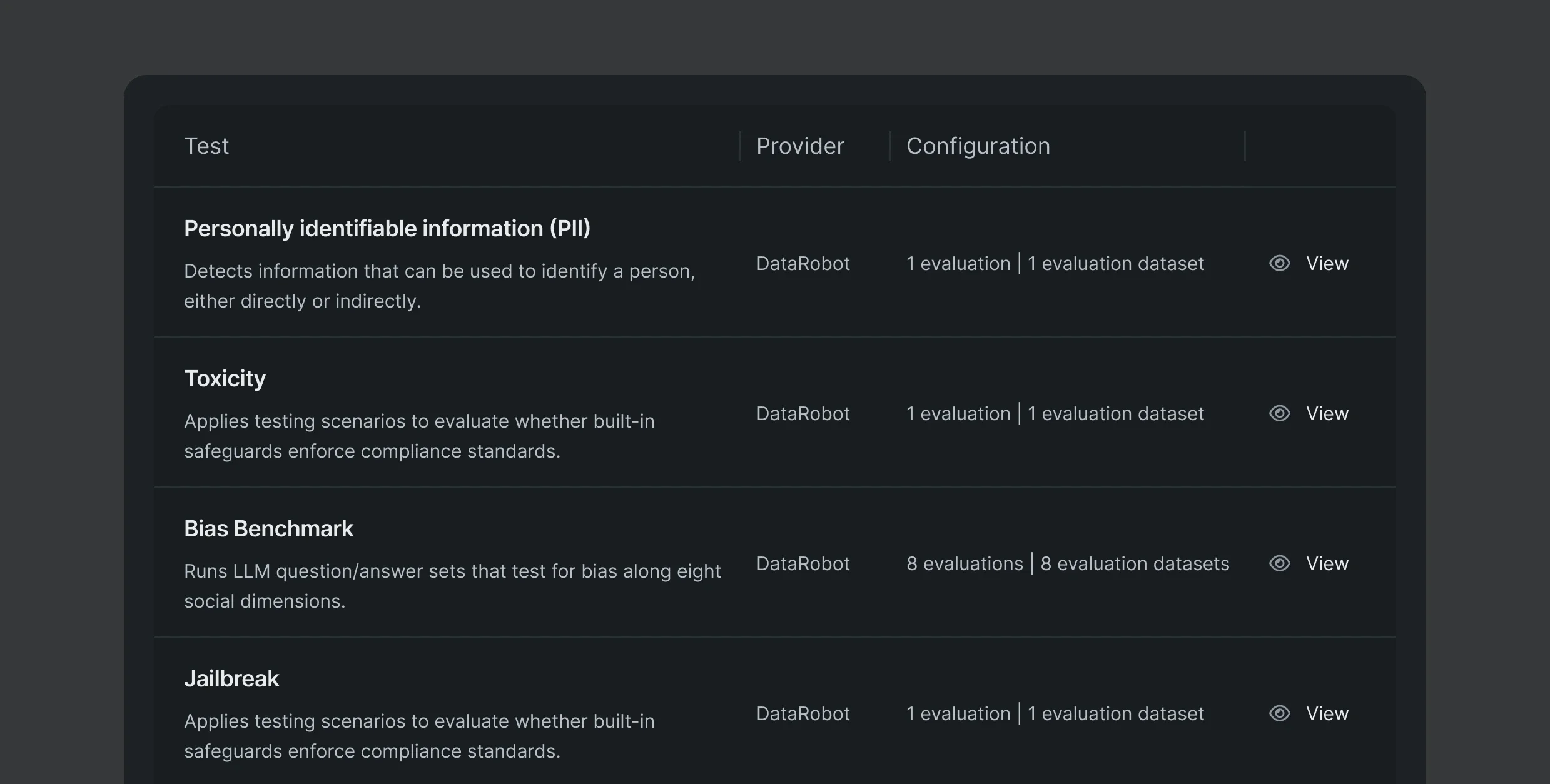

Pre-deployment AI red-teaming. Ensure your models are robust and secure by red-teaming your AI before deployment. Test with synthetic or custom datasets to uncover jailbreaks, bias, inaccuracies, toxicity, and compliance issues, so you can address vulnerabilities early.

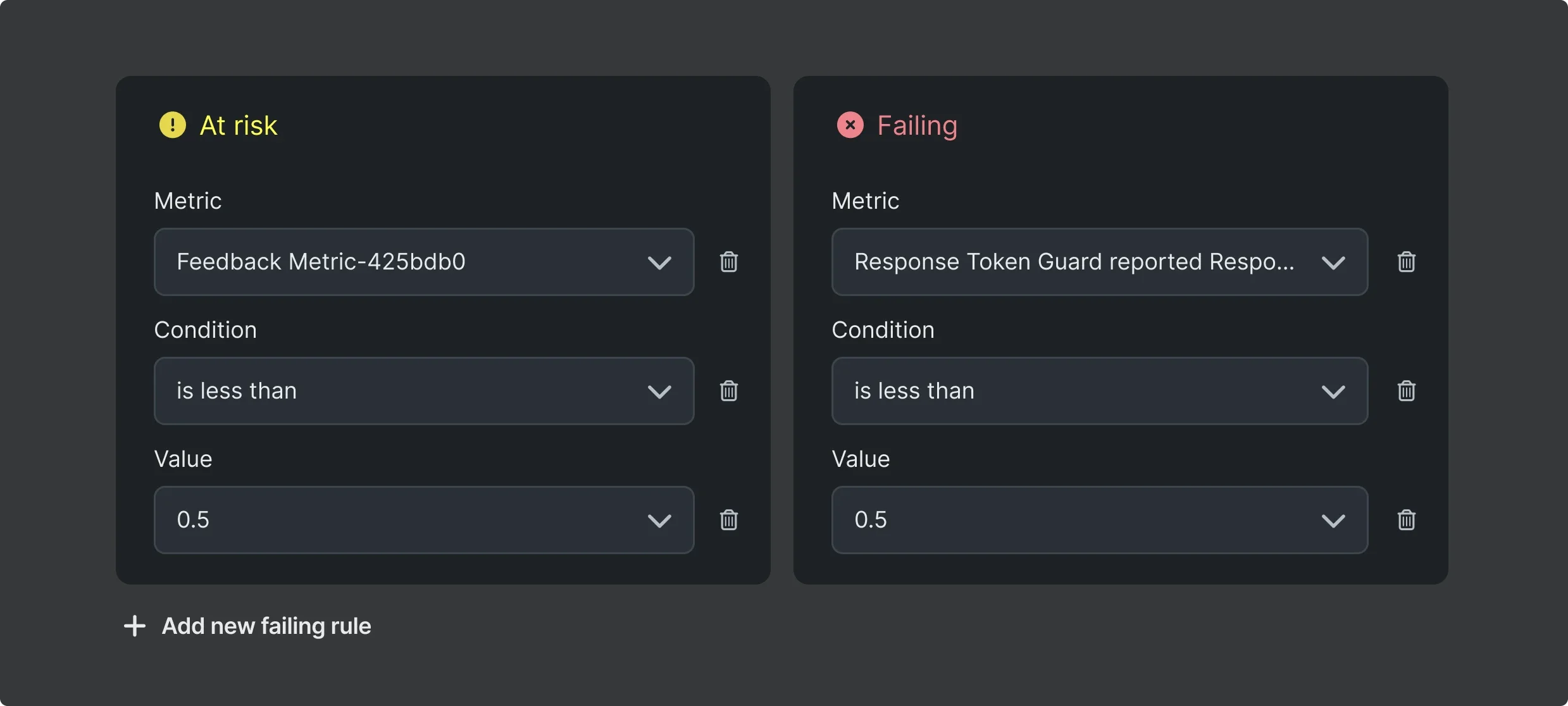

Custom alerts and notification policies. Get real-time metrics and customized alerts in your data science applications or SIEM tools so the right teams can act fast.

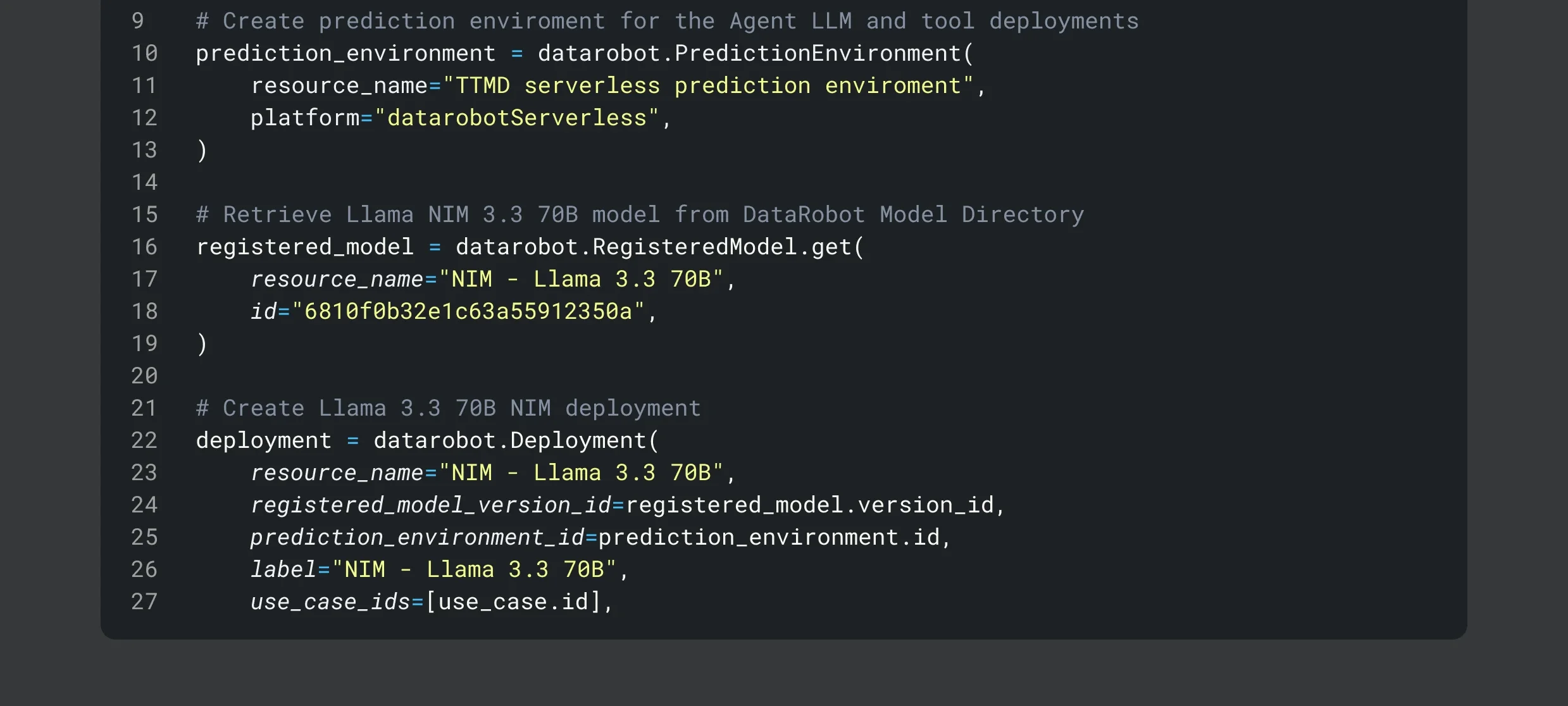

Reduce deployment complexity. Deploy predictive and generative AI models, agents, and apps faster from a single organized registry. Automatically package pipelines, vector databases, and prompting strategies into a production-ready REST API with one click.

Cloud deployment

Edge deployment

Embed into business applications

On-prem and air-gapped

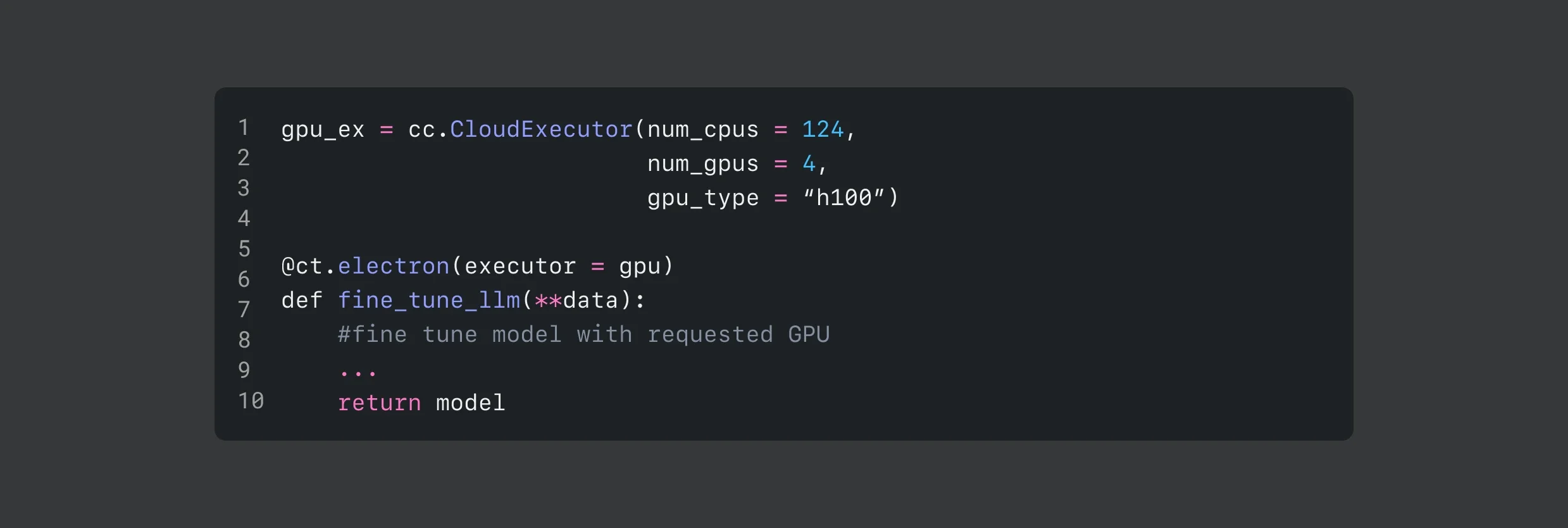

Optimize compute resources in real time. Maximize performance and cost efficiency with a serverless environment that automates compute provisioning and deallocation to eliminate waste. Simply set priorities like cost, latency, or availability, and skip the DevOps overhead. Accelerate vector database updates, improve guard performance, and reduce latency without manual adjustments.

Deploy agents with NVIDIA. Skip the setup. Eliminate manual complexity and rapidly deploy pre-configured, GPU-optimized NVIDIA NIM microservices using the new NVIDIA NIM Gallery. Customize any DataRobot agentic app to leverage NIM microservices, and secure all your AI apps with NVIDIA NeMo Guardrails.

Orchestrate AI pipelines at scale. Whether you're running batch jobs or real-time apps, ensure uninterrupted performance at every stage of the pipeline. Use the native Apache Airflow integration to run and monitor batch workflows. Rapidly set up real-time workflows using pre-built, customizable blueprints and optimize them with dynamic compute orchestration.